Data Pipeline Optimization

Key Highlights

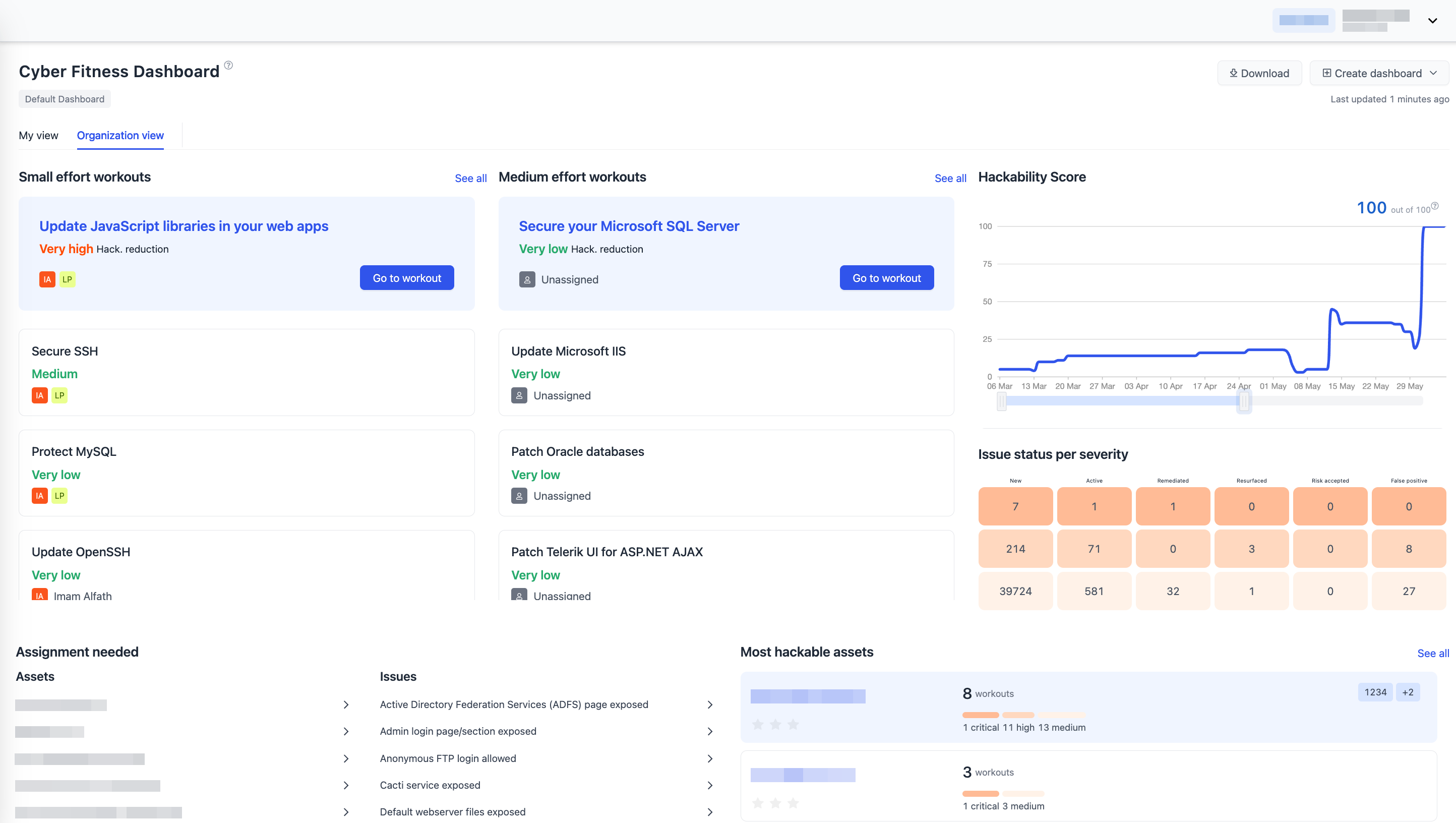

This dashboard aggregates million rows of data. At some point, the pipeline exceed the 1-hour time limit to 7 hours.

I solved the problem by reducing the build time to 30 minutes using time-based segregation.

Data Pipeline Optimization

Problems

As huge data pipeline started getting slow, the engineering team missed the expected 1-hour limit to serve latest data.

Solution

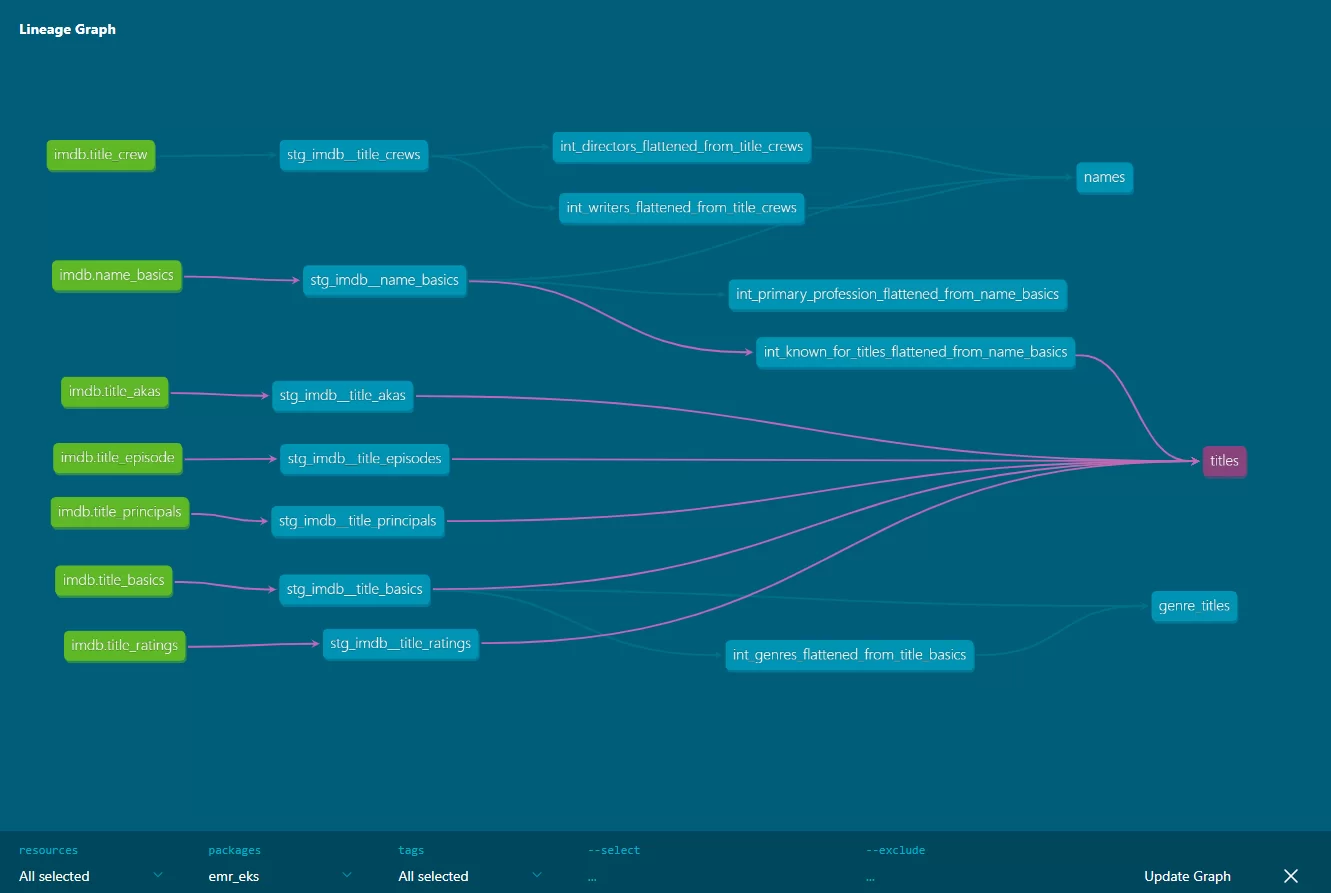

As below illustration shows, by default DBT build the whole data from the first timestamp across all customers.

First, I deep dived and identifed the slowest model. Then I did time-base segregation that enables DBT to have lesser row table to join at once.

After these data were seperated into chunks, I had to rejoin them using UNION ALL that proves its effectiveness.